The Threat of AI Fabricating People for Malicious Purposes (Deepfakes) – and What We Can Do About It

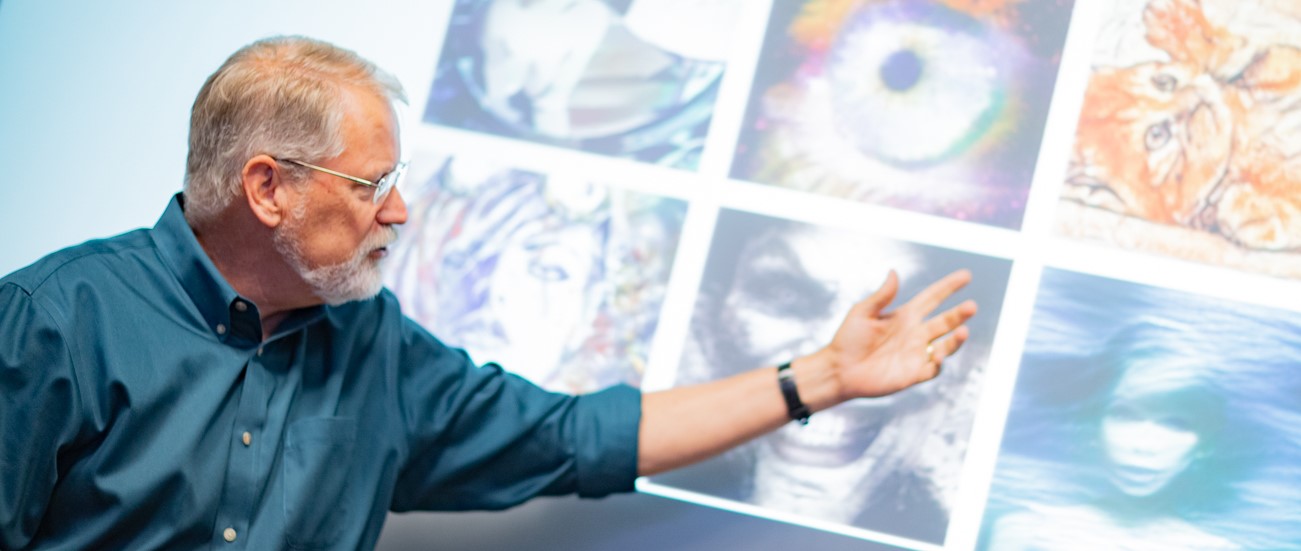

On September 27, 2019, the University of Maryland (UMD) College of Information Studies and the UMD Future of Information Alliance hosted experts Dan Russell, Danielle Citron and Hany Farid for a discussion of the rise of deepfakes online and in the media. “Deepfake” refers to images and videos fabricated with artificial intelligence, most often for malicious purposes. Russell, Citron and Farid discussed ways to detect deepfakes and keep them from spreading further into the mainstream, as well as non-harmful uses of the technology, such as virtual reality experiences or the colorization of old photographs.

Human nature leads us to react strongly and emotionally to imagery and audio, especially when it is negative or salacious. This tendency, combined with our constant connection to social media, prompts us to share negative and even fake news more quickly. “In many respects, we are the bug in the code – we make these things go viral,” said Dr. Danielle Citron, professor at Boston University.

Advances in artificial intelligence, machine learning and big data have made deepfakes, including face swapping and lip-syncing techniques, more difficult to detect. As a result, separating accurate media from fabricated content is becoming more challenging and time-consuming.

The weaponization of deepfakes could have a massive impact on our economy, justice system and national security. Since 2016, misinformation and disinformation campaigns have increased dramatically across the globe, with 70 countries, including the United States, sponsoring such campaigns to manipulate elections, according to a study published by the University of Oxford. American researchers also found that after the 2016 U.S. presidential election, fake news spread ten times faster than accurate news, especially on social media.

“Things that happen in the digital world lead to really horrific things in the physical world – violence, often against women and underrepresented groups, election tampering, fraud – yet we sort of shrug our shoulders and say ‘it’s just the internet,’” said Hany Farid, author and professor at the University of California, Berkeley. “One of the things we have to think about is how do you control this technology so that it’s accessible, useful, but doesn’t engage my adversary in creating better fakes.”

Although deepfakes can be used to cause harm, the underlying technology also offers all kinds of positive capabilities. It allows us to virtually fly over global landmarks, compose music without learning to play an instrument, and create more captivating imagery through filters and other special effects.

So, how do we detect deepfakes? As the technology becomes more advanced, there are a few heuristic practices we can rely on, such as paying attention to the timing of certain events and running reverse image searches on suspicious images and videos. For a more definitive answer, we can also analyze soft biometrics: distinctive characteristics that, unlike fingerprints or iris scans, describe the ways in which we move and talk. Characterizing a person’s soft biometrics can help determine whether a video or image of that person has been fabricated.

In late September, Google announced that it had released a collection of deepfake videos to give researchers a data set for developing synthetic video detection methods. The Wall Street Journal has also introduced training to help its journalists identify deepfakes. These are just some of the ways companies and other institutions are combating harmful deepfakes.

“If you have a big, emotional response to something, stand back and say ‘Why am I having this deep emotional response to this particular image or particular video?’,” said Dan Russell, senior researcher at Google. “If you’re going to be a literate citizen in this society, you have to know what a deepfake is, you need to know how they’re made, and the impact they have on us individually and as a culture.”

Speakers:

Dan Russell, Senior Researcher, Google

Dr. Danielle Citron, Boston University

Dr. Hany Farid, University of California, Berkeley

Moderators:

Dr. Sandy Banisky, Professor, Philip Merrill College of Journalism, UMD

Dr. Ira Chinoy, Professor, Philip Merrill College of Journalism, UMD